The Information Strategy Group at Liverpool Business School, Liverpool John Moores University, offers courses and undertakes research in areas pertaining to information management, business information systems, communications and public relations, and library and information science.

Monday, 15 November 2010

New undergraduate degree programme: BSc Business Communications at LJMU

In BSc Business Communications at LJMU (UCAS Code: N102) students will study the strategic importance of communication, information and technology, and the role these play in the modern business organisation. Further information on the new programme can be found at our standalone BSc Business Communications website, the official LJMU BSc Business Communications website, or our Facebook group (BSc Business Communications at LJMU). BSc Business Communications is recruiting now for 2011/2012.

Tuesday, 2 November 2010

Crowd-sourcing faceted information retrieval

Blekko presents a fresh attempt to move web search forward, using a style of retrieval which has hitherto only been successful in systems based on pre-coordinated indexes and combining it with crowd-sourcing techniques. Interestingly, Rich Skrenta - co-founder of Blekko - was also a principal founder of the Dmoz project. Remember Dmoz? When I worked on BUBL years and years ago, I recall considering Dmoz to be an inferior beast. But it remains alive and kicking – and remains popular and relevant to modern web developments with weekly RDF dumps made of its rich, categorised, crowd-sourced content for Linked Data purposes. BUBL, on the other hand, has been static for years.

Flirting with taxonomical organisation and categorisation with Dmoz (as well as crowd-sourcing) has obviously influenced the Blekko approach to search. Blekko provides innovation in retrieval by enabling users to define their very own vertical search indexes using so-called 'slashtags', thus (essentially) providing a quasi form of faceted search. The advantage of this approach is that using a particular slashtag (or facet, if you prefer) in a query increases precision by removing 'irrelevant' results associated with different meanings of the search query terms. Sounds good, eh? Ranganathan would be salivating at such functionality in automatic indexing! To provide some form of critical mass, Blekko has provided hundreds of slashtags that can be used straight away; but the future of slashtags depends on users creating their own, which will be screened by Blekko before being added to their publicly available slashtags list. Blekko users can also assist in weeding out poor results and any erroneous slashtag results (see the video below), thus contributing to the improved precision Blekko purports to have and maintaining slashtag efficacy. In fact, Skrenta proposes that the Blekko approach will improve precision in the longer term. Says Skrenta on the BBC dot.Maggie blog:

"The only way to fix this [precision problem] is to bring back large-scale human curation to search combined with strong algorithms. You have to put people into the mix […] Crowdsourcing is the only way we will be able to allow search to scale to the ever-growing web".

Let's look at a typical Blekko query. I am interested in the new Microsoft Windows mobile OS, and in bona fide reviews of the new OS. Moreover, since I am tech savvy and will have read many reviews, I am only interested in reviews published recently (i.e. within the past two weeks, or so). In Blekko we can search like so…

…where the /tech-reviews slashtag limits results to genuine reviews published in the technology press and/or associated websites, and the /date slashtag orders the results by date. It works, and works spectacularly well. Skrenta sticks two fingers up at his competitors when in the Blekko promotional video he quips, "Try doing this [type of] search anywhere else!" Blekko provides 'Five use cases where slashtags shine' which - although only using one slashtag - illustrate how the approach can be used in a variety of different queries. Of course, Blekko can still be used like a conventional search engine, e.g. enter a query and get results ranked according to the Blekko algorithm. And on this count – using my own personal 'search engine test queries' - Blekko appears to rank relevant results sensibly and index pages which other search engines either ignore or, if they do index them, normally drown in spam (spam results which these engines rank as more relevant).
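For the curious, the effect of the two slashtags described above can be sketched in a few lines of Python. This is only an illustration of the idea (a curated list of sites acting as a facet, plus a date ordering) and says nothing about how Blekko actually implements it. The `SLASHTAGS` table, the `Result` class, the `apply_slashtags` function and all of the example.com URLs are hypothetical names invented for the sketch.

```python
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse

# Hypothetical slashtag table: each slashtag maps to a curated set of hosts.
# A real slashtag list would be far larger and maintained by its users.
SLASHTAGS = {
    "tech-reviews": {"reviews.example.com", "gadgets.example.org"},
}


@dataclass
class Result:
    url: str
    title: str
    published: date
    score: float  # relevance assigned by the ordinary ranking algorithm


def apply_slashtags(results, slashtags):
    """Keep only results from hosts curated under each slashtag.

    The special "date" slashtag does not filter; it switches the ordering
    from relevance to most-recent-first.
    """
    kept = list(results)
    for tag in slashtags:
        if tag == "date":
            continue
        allowed = SLASHTAGS[tag]  # an unknown slashtag raises KeyError
        kept = [r for r in kept if urlparse(r.url).hostname in allowed]
    if "date" in slashtags:
        kept.sort(key=lambda r: r.published, reverse=True)
    else:
        kept.sort(key=lambda r: r.score, reverse=True)
    return kept


results = [
    Result("http://reviews.example.com/phone-os", "Hands-on review", date(2010, 10, 28), 0.7),
    Result("http://spam.example.net/cheap-phones", "Buy phones", date(2010, 11, 1), 0.9),
    Result("http://gadgets.example.org/os-verdict", "The verdict", date(2010, 10, 30), 0.6),
]

filtered = apply_slashtags(results, ["tech-reviews", "date"])
for r in filtered:
    print(r.published, r.title)
```

Without any slashtags the spammy page wins on raw score; with the facet applied it disappears altogether, which is the precision gain the slashtag approach is aiming for.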

There is a lot to admire about Blekko. Aside from an innovative approach to information retrieval, there is also a commitment to algorithm openness and transparency which SEO people will be pleased about; but I worry that while a Blekko slashtag search is innovative and useful, most users will approach Blekko as another search engine rather than buying into the importance of slashtags and, in doing so, will not hang around long enough to 'get it' (even though I intend to...). Indeed, to some extent Blekko has more in common with command line searching of the online databases in the days of yore. There are also some teething troubles which rigorous testing can reveal. But there are reasons to be hopeful. Blekko is presumably hoping to promote slashtag popularity and have users following slashtags just as users follow Twitter groups, thus driving website traffic and presumably advertising. Being the owner of a popular slashtag could be not only useful but also highly profitable, even if Blekko remains small.

blekko: how to slash the web from blekko on Vimeo.

Wednesday, 22 September 2010

New Google

There was a time (probably around two years ago) when updates to the Official Google Blog (OGB) occurred every other week, and often the receipt of the RSS feed would compel me to post to this blog, such was the gravity of OGB announcements (see this, this and this, for example). However, in the past six months the OGB has been in overdrive. Almost every day a huge Google announcement is made on the OGB, whether it's the announcement of Google Instant or significant developments to Google Docs. Enter Google New, a new dedicated website to find all things new from Google. Here's the rationale from Google as published – yup, you guessed it – on the OGB:

"If it seems to you like every day Google releases a new product or feature, well, it seems like that to us too. The central place we tell you about most of these is through the official Google Blog Network [...] But if you want to keep up just with what’s new (or even just what Google does besides search), you’ll want to know about Google New. A few of us had a 20 percent project idea: create a single destination called Google New where people could find the latest product and feature launches from Google. It’s designed to pull in just those posts from various blogs."

Makes sense I suppose, eh?

Thursday, 9 September 2010

Web Teaching Day - 6 Sep 2010

On Monday, 6 Sept 2010 I attended a Web Teaching Day organised by Richard Eskins from Manchester Metropolitan University (his blog). We do a fair amount of web teaching in this group so I thought it would be useful to go along. Web teaching is undertaken by the Computing Department or the Art / Design department in most Universities and our courses tend to be very business orientated.

In many ways the conversations I had reminded me of those we have regarding Information Systems at John Moores. There's a problem relating to the range of skills required, from basic technical skills, through design skills, to high-level interpersonal skills. Our aim is to produce a "hybrid" graduate combining business with systems / technical skills. There's huge demand in industry for these graduates and our best students command very high salaries, but students find the work hard and it is difficult to recruit good students.

Web design / development courses have very similar aims.

Some of the highlights of the day:

Chris Mills from Opera talked about the Opera Web Standards Curriculum. He's been producing teaching material for students all of which is freely available on the internet. He also talked about Mozilla's P2PU (Peer to Peer University) programme - School of Webcraft which is aimed at delivering and assessing these skills. He's co-author on Interact with Web Standards: A Holistic Approach to Web Design (Voices That Matter).

David Watson from Greenwich University talked about the course he designed and runs - MA in Web Design and Content Planning. They've produced their own site to support the course. One interesting point he made is that he reckoned that this site had increased applicants to the course significantly: they now have 60 applicants for 20 places, whereas before they struggled for numbers. He published his presentation here. He had some interesting observations on setting up and running the course. In particular, don't depend on the University to market and recruit students to your course; you may end up with no students.

Christopher Murphy and Nik Persson (also known as the Web Standardistas) talked about their course BSc (Hons) Interactive Multimedia Design at Ulster University and the issues involved in delivering to undergraduates. I particularly liked their use of the nerd (Bill Gates) - designer (Steve Jobs) continuum to describe the difficulties of being a web builder and how you need so many disparate skills along this path. Here are some of the tools they recommend. Their book is HTML and CSS Web Standards Solutions: A Web Standardistas' Approach.

Aesha Zafar from the BBC talked about the new developments in Manchester and, in particular, the jobs that will be created there and Nicola Critchlow talked about the gap between industry's needs and graduates being produced by Universities (which is large and getting larger, nothing new there).

Finally, Andy Clarke, a freelance designer, led a group discussion and chat at the end.

So what skills does a graduate from a web design / development course need? This is my list based on the nerd - designer continuum:

- databases

- server side programming languages (PHP seems to be in vogue though there are others)

- Javascript

- CSS

- HTML including web development tools

- graphics

- design

- people skills

It was an excellent day with lots of really inspiring speakers and it really got me fired up about the possibilities of delivering a web design / development course at John Moores. I don't believe that the course I want to offer exists here (though that's based on absolutely no research whatsoever!).

Our team has really strong skills in databases, programming, HTML, CSS and Javascript and we teach most of these skills at various levels. The people skills elements are taught throughout all our courses and are embedded in all JMU programmes via the World of Work (WoW) programme.

Our weakness is in design / graphics; however, Liverpool School of Art & Design has huge experience in areas such as graphic design and digital media.

So, here's a great opportunity to collaborate on a new course in an area that is growing in popularity.

Monday, 30 August 2010

Musical experiments with HTML5

HTML5 is still currently under development but is the next major revision of the HTML standard (as distinct from the recent incorporation of RDF, i.e. XHTML+RDFa). HTML5 will still be optimised for structuring and presenting content on the Web; however, it includes numerous new elements to better incorporate multimedia (which is currently heavily dependent on third party plug-ins), drag and drop functionality, improved support for semantic microdata, among many, many other things...

The Chrome Experiment entitled, 'The Wilderness Downtown', uses a variety of HTML5 building blocks. In their words:

"Choreographed windows, interactive flocking, custom rendered maps, real-time compositing, procedural drawing, 3D canvas rendering... this Chrome Experiment has them all. "The Wilderness Downtown" is an interactive interpretation of Arcade Fire's song "We Used To Wait" and was built entirely with the latest open web technologies, including HTML5 video, audio, and canvas."

Being an 'experiment' it can be a little over the top, and I suppose it isn't an accurate reflection of how HTML5 will be used in practice. Nevertheless, it is certainly worth checking out - and I was quite impressed with canvas. An HTML5 compliant browser is required, as well as some time (it took 7 minutes to load!!!).

Monday, 23 August 2010

Jimmy Reid and the public library: an education like no other


| Poster of shipyard workers at Titan. Image: G.Macgregor, CC rights. |

I recently visited the former site of John Brown & Company Shipbuilders. The builder of choice for Cunard Line, John Brown was one of the 40 shipyards that prospered on the Clyde and produced some of the most famous vessels the world has ever seen. The Queen Mary, Queen Elizabeth, the Lusitania, the Aquitania, the Britannia, HMS Hood, the QE2 – they were all built there. And although it's been turned into an excellent tourist attraction with the help of EU funding, it remains a tragically haunting place. 100 hectares of open space. The largest of the slipways remains, from which the QE2 would have been launched into the Clyde for fitting out. The classic Titan cantilever crane has been restored too, giving a tiny glimpse of the scale and sheer majesty of the vessels being built at the yard. But it was in the final years of the 'good times' at the shipyards that Jimmy Reid grew up.

| RMS Queen Mary at Long Beach, California, now serving as a museum and hotel. Image: WPPilot, Wikimedia Commons, CC rights. |

"We are not going to strike. We are not even having a sit-in strike. Nobody and nothing will come in and nothing will go out without our permission. And there will be no hooliganism, there will be no vandalism, there will be no bevvying because the world is watching us, and it is our responsibility to conduct ourselves with responsibility, and with dignity, and with maturity."

This unique industrial action had integrity and was successful in the short-term, attracting international attention, sympathy and financial support (most notably from John Lennon and Yoko Ono). It is also why BAE Systems today have two ex-UCS yards on the Clyde, currently building the brand new high-end Type 45 Destroyers for the Royal Navy. Ultimately though, shipbuilding in Glasgow and on the Clyde today is a shadow of its former self.


| Titan cantilever crane at the former John Brown shipyard, Clydebank. Image: G.Macgregor, CC rights. |

"Our education was football, his education was the Govan library. He was never out of there."

Public libraries are often considered "the people's university". When Reid was made Rector of the University of Glasgow, "pompous" academics would ask which university he attended, to which Reid would reply: "Govan Library"! The public library moulded Reid, provided an education like no other and helped him develop an intellect which Sir Michael Parkinson described as "formidable". Reid personified the public library mission of education and lifelong learning available to all, regardless of age, skill level, or ability to pay. I have many issues with the running of public libraries today (some of which I might discuss in a future blog), but their importance in creating people like Jimmy Reid across Britain, and elsewhere in the world, can never be forgotten. And I'm not necessarily talking about people of Reid's political persuasion; but the importance of providing fantastic opportunities for education, enlightenment and betterment – and escape.

The wealth of the Govan area during its shipbuilding heyday is reflected in its public buildings. The magnificent Town Hall, for example, with its neo-classical decoration, overlooking the quasi-futuristic architecture of Glasgow's redeveloped waterfront (and now used as a recording HQ for Franz Ferdinand). And Govan Public Library (or Elder Park Library as it is officially known) is no exception; a beautiful Victorian listed building situated within Elder Park. What a great location to build a public library; inviting local residents to escape the noise and dirt of shipbuilding and marine engineering to enjoy its salubrious surroundings and architectural splendour while reading an improving book! The Victorians had style - and they recognised the importance of the public library as an institution.


| Elder Park Library, front elevation. Image: mike.thomson75. |

It is a dangerous time for public libraries. Because they are such established institutions, people often take for granted that they will always exist, come rain or shine. Yet, the role of public libraries as the people's university remains as important as ever, not only to promote social inclusion and enable vulnerable people in society to engage with civil society, but also to provide opportunities for lifelong learning in dire economic circumstances. If some should go, where will the Jimmy Reids of tomorrow go? Some will argue that the increased penetration of broadband (circa 65%) makes some public libraries dispensable, but what about the remaining 35%, or those who are suddenly made redundant and can no longer afford their broadband bill? Where do they go if they want to develop knowledge in a particular discipline, or learn about IT? And just because someone has broadband does not mean that they can get access to all the information they might require (bibliographic databases?), or that the information will be any good. The Telegraph reckons Jimmy Reid's life would make a great biopic. I agree, so long as the splendour of Elder Park Library is maintained and we have plenty of clips of Jimmy perusing the book stacks.

Thursday, 19 August 2010

Where are the WarGames students?

One type of warfare which all commentators agreed was potentially imminent is cyber warfare. Not only is such warfare potentially imminent, but the UK (along with other NATO allies) is completely unprepared for a sophisticated or sustained attack. According to the programme only 24 people at the MoD are actively working on cyber security(!). Commentators agreed that funding had to be diverted from other armed services (i.e. RAF) to invest in cyber security. This means more advanced computing and information professionals to improve cyber security, but also to operate un-manned drones, manipulate intelligence data, and so forth. 'More Bill Gates-type recruits and fewer soldiers' was the message.

Although it wasn't given treatment in the programme, the conundrum for our cyber security – as well as our economy - is the declining numbers of students seeking to study computing science and information science at undergraduate/postgraduate level. This is a decline which is reflected more generally in the lack of school leaver interest in science and technology, something which – unless you have been living in a cave – the last Labour government and the current coalition are attempting to address. With the release of A-level results today and the massive demand for university places this year, some universities have been boosted by government grants designed to recruit extra students in science and technology. The coalition, in particular, sees it as a way of improving economic growth prospects; but it seems that the need to reverse this trend has become even more urgent given that we only have a small mini-bus full of 'cyber soldiers' – and, let's face it, five are probably on part-time contracts, two will be on maternity leave and one will be on long term sick leave.

It's a far cry from the 1980s Hollywood classic, 'WarGames' (1983). WarGames follows a young hacker (Matthew Broderick) who inadvertently accesses a US military supercomputer programmed to predict possible outcomes of nuclear war. Taking advantage of the unbelievably simple command language interface ("Can we play a global thermonuclear simulation game?" types Broderick) and Artificial Intelligence (AI) light years ahead of the 2010 state of the art, Broderick manages to initiate a nuclear war simulation believing it to be an innocent computer game. Of course, Broderick's shenanigans cause US military panic and almost cause World War III. I remember going to the petrol station with my father to rent WarGames on VHS as soon as it was available (yes – in the early 1980s petrol stations were often the place to go for video rentals! I suppose the video revolution was just kicking off...) and being thoroughly inspired by its depiction of computing and hacking. I wanted to be a hacker and was lucky enough to receive an Atari 800XL that Christmas, although programming soon gave way to gaming. Pac-Man anyone? Missile Command was pretty good too...

Monday, 26 July 2010

The end of social networking or just the beginning?

"I don't think anyone is going to build a social network from scratch whose only purpose is to connect people. We've got Facebook (personal), LinkedIn (business) and Twitter (SMS-length for mobile)."

Huh. Maybe he's right? The monopolisation of the social networking market is rather unfortunate and, I suppose, rather unhealthy - but it is probably and ultimately necessary owing to the current business models of social media (i.e. you've got to have a gargantuan user base to turn a profit). The 'big three' (above) have already trampled over the others to get to the top out of necessity.

However, Arthur's suggestion is that 'standalone' social networking websites are dead, rather than social networking itself. Social networking will, of course, continue; but it will be subsumed into other services as part of a package. How successful these will be is anyone's guess. This situation is contrary to what many commentators forecast several years ago. Commentators predicted an array of competing social networks, some highly specialised and catering for niche interests. Some have already been and gone; some continue to limp on, slowly burning the cash of venture capitalists. Researchers also hoped - and continue to hope - for open applications making greater use of machine readable data on foaf:persons using, erm, FOAF.

The bottom line is that it's simply too difficult to move social networks. For a variety of reasons, Identi.ca is generally acknowledged to be an improvement on Twitter, offering greater functionality and open-source credentials (FOAF support anyone?); but persuading people to move is almost impossible. Moving results in a loss of social capital and users' labour, hence recent work in metadata standards to export your social networking capital. Yet, it is not in the interests of most social networks to make users' data portable. Monopolies are therefore always bound to emerge.

But is privacy the elephant in the room? Arthur's article omits the privacy furore which has pervaded Facebook in recent months. German data protection officials have launched a legal assault on Facebook for accessing and saving the personal data of people who don't even use the network, for example. And I would include myself in the group of people one step away from deleting their Facebook accounts. Enter diaspora: diaspora (what a great name for a social network!) is a "privacy aware, personally controlled, do-it-all, open source social network". The diaspora team vision is very exciting and inspirational. These are, after all, a bunch of NYU graduates with an average age of 20.5 and ace computer hacking skills. Scheduled for a September 2010 launch, diaspora will be a piece of open-source personal web server software designed to enable a distributed and decentralised alternative to services such as Facebook. Nice. So, contrary to Arthur's article, there are new, innovative, standalone social networks emerging and being built from scratch. diaspora has immense momentum and taps into the increasing suspicion that users have of corporations like Facebook, Google and others.

Sadly, despite the exciting potential of diaspora, I fear they are too late. Users are concerned about privacy. It is a misconception to think that they aren't; but valuing privacy over social capital is a difficult choice for people that lead a virtual existence. Jettison five years of photos, comments, friendships, etc. or tolerate the privacy indiscretions of Facebook (or other social networks)? That's the question that users ask themselves. It again comes down to data portability and the transfer of social capital and/or user labour. diaspora will, I am sure, support many of the standards to make data portability possible, but will Facebook make it possible to output and export your data to diaspora? Probably not. I nevertheless watch the progress of diaspora closely and I hope, just hope they can make it a success. Good luck, chaps!

Monday, 19 July 2010

Google finally gets serious about the Semantic Web?

Google has this week announced the purchase of Metaweb Technologies. None the wiser?! Metaweb is perhaps most known for providing the Semantic Web community with Freebase. Freebase cropped up last year on this blog when we discussed the emergence of Common Tags. Freebase essentially represents a not insignificant hub in the rapidly expanding Linked Data cloud, providing RDF data on 12 million entities with URIs linking to other linked and Semantic Web datasets, e.g. DBpedia.

My comments are limited to the above; just thought this was probably an extremely important development and one to watch. A high level of social proof appears to be required before some tech firms or organisations will embrace the Semantic Web. But what greater social proof than Google? Google also appear committed to the Freebase ethos:

"[We] plan to maintain Freebase as a free and open database for the world. Better yet, we plan to contribute to and further develop Freebase and would be delighted if other web companies use and contribute to the data. We believe that by improving Freebase, it will be a tremendous resource to make the web richer for everyone. And to the extent the web becomes a better place, this is good for webmasters and good for users."

Very significant stuff indeed.

Tuesday, 13 July 2010

iStrain?

Nielsen's research motivation was clear: e-book readers and tablets are finally growing in popularity and they are likely to become an important means of engaging in long-form reading in the future. However, such devices will only succeed if they are better than reading from PC or laptop screens and - the mother of all reading devices - the printed book. Nielsen and his assistants therefore performed a readability study of tablets, including Apple's iPad and Amazon's Kindle, and compared these with books. You can read the article in full in your own time. It's a brief read at circa 1000 words. Essentially, Nielsen's key finding was that reading from a book is significantly quicker than reading from tablet devices. Reading from the iPad was found to be 6.2% slower and the Kindle 10.7% slower.

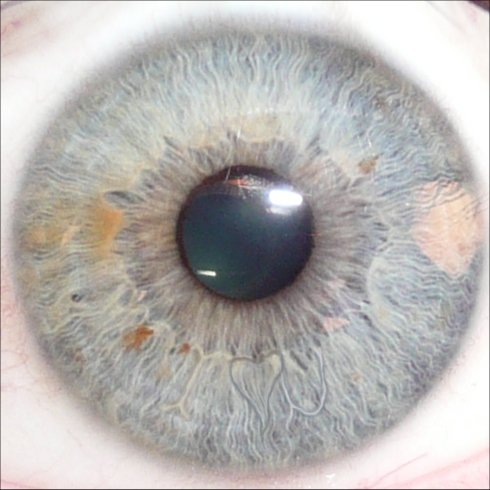

I recall ebook readers emerging in the late 1990s. At that time ebook readers were mysterious but exciting devices. After some above average ebook sales for a Stephen King best seller in 1999/2000 (I think), it was predicted that ebook readers would take over the publishing industry. But they didn't. The reasons for this were/are complex but pertain to a variety of factors including conflicting technologies, lack of interoperability, poor usability and so forth. There were additional issues, many of which some of my ex-colleagues investigated with their EBONI project. One of the biggest factors inhibiting their proliferation was the issue of eye strain. The screens on early ebook readers lacked sufficient resolution and were simply small computer screens which came with the associated eye strain issues for long-form reading, e.g. glare, soreness of the eyes, headaches, etc. Long-form reading was simply too unpleasant, which is why the emergence of the Kindle, with its use of e-ink, was revelatory. The Kindle - and readers like it - have been able to simulate the printed word such that eye strain is no longer an issue.

Nielsen has already attracted criticism regarding flaws in his methodology; but in his defence he did not purport his study to be rigorously scientific, nor has he sought publication of his research in the peer-reviewed research literature. He wrote up his research in 1000 words for his website, for goodness sake! In any case, his results were to be expected. Applegeeks will complain that he didn't use enough participants, although those familiar with the realities of academic research will know that 30-40 participant user studies are par for the course. However, there is one assumption in Nielsen's article which is problematic and which has evaded discussion: iStrain. Yes - it's a dreadful pun but it strikes at the heart of whether these devices are truly readable or not. Indeed, how conducive can a tablet or reader be for long-form reading if your retinas are bleeding after 50 minutes reading? Participants in Nielsen's experiment were reading for around 17 minutes. Says Nielsen:

"On average, the stories took 17 minutes and 20 seconds to read. This is obviously less time than people might spend reading a novel or a college textbook, but it's much longer than the abrupt reading that characterizes Web browsing. Asking users to read 17 minutes or more is enough to get them immersed in the story. It's also representative for many other formats of interest, such as whitepapers and reports."

...All of which is true, sort of. But in order to assess long-form reading participants need to be reading for a lot, lot longer than 17 minutes, and whilst the iPad enjoys a high screen resolution and high levels of user satisfaction, how conducive can it be to long-form reading? And herein lies a problem. The iPad was never really designed as an e-reader. It is a multi-purpose mobile device which technology commentators - contrary to all HCI usability and ergonomics research - seem to think is ideally suited to long-form reading. It may rejuvenate the newspaper industry since this form of consumption is similar to that explained above by Nielsen, but the iPad is ultimately no different to the failed e-reading technologies of ten years ago. In fact, some might say it is worse. I mean, would you want to read P.G. Wodehouse through smudged fingerprints?! The results of Nielsen's study are therefore interesting but they could have been more informative had participants been reading for longer. A follow-up study is the order of the day and would be ideally suited to an MSc dissertation. Any student takers?!

(Image (eye): Vernhart, Flickr, Creative Commons Attribution-NonCommercial-ShareAlike 2.0 Generic)

(Image (beware): florian.b, Flickr, Attribution-NonCommercial 2.0 Generic)

Monday, 28 June 2010

Love me, connect me, Facebook me

Being a Facebook group page it is accessible to everyone; however, those of you with Facebook accounts can become fans to be kept abreast of programme news, events, research activity, industry developments and so forth. Click the "Like it!" button!

Wednesday, 23 June 2010

Visualising the metadata universe

As evidenced by some of my blogs, there are literally hundreds of metadata standards and structured data formats available, all with their own acronym. This seems to have become more complicated with the emergence of numerous XML based standards in the early to mid noughties, and the more recent proliferation of RDF vocabularies for the Semantic Web and the associated Linked Data drive. What formats exist? How do they relate to each other? For which communities of practice are they optimised, e.g. information industry or cultural sector? What are the metadata, technical standards and vocabularies that I should be cognisant of in my area? And so the question list goes on...

These questions can be difficult to answer, and it is for this reason that Jenn Riley has produced a gigantic poster diagram (above) entitled, 'Seeing standards: a visualization of the metadata universe'. The diagram achieves what a good model should, i.e. simplifying complex phenomena and presenting a large volume of information in a condensed way. As the website blurb states:

"Each of the 105 standards listed here is evaluated on its strength of application to defined categories in each of four axes: community, domain, function, and purpose. The strength of a standard in a given category is determined by a mixture of its adoption in that category, its design intent, and its overall appropriateness for use in that category."

A useful conceptual tool for academics, practitioners and students alike. A glossary of metadata standards in either poster or pamphlet form is also available.

Tuesday, 22 June 2010

Goulash all round: Linked Data at NSZL

The RDF vocabularies used include Dublin Core RDF for bibliographic metadata, SKOS for subject indexing (in a variety of terminologies) and FOAF for name authority data. Incredible! Not only that, the FOAF descriptions include mapped owl:sameAs statements to corresponding dbpedia URIs. For example, here is FOAF data pertaining to Hungarian novelist, Jókai Mór:

<?xml version="1.0"?>
<rdf:RDF
    xmlns:dbpedia="http://dbpedia.org/property/"
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
    xmlns:skos="http://www.w3.org/2004/02/skos/core#"
    xmlns:foaf="http://xmlns.com/foaf/0.1/"
    xmlns="http://web.resource.org/cc/"
    xmlns:owl="http://www.w3.org/2002/07/owl#"
    xmlns:dc="http://purl.org/dc/elements/1.1/"
    xmlns:zs="http://www.loc.gov/zing/srw/">
  <foaf:Person rdf:about="http://nektar.oszk.hu/resource/auth/33589">
    <dbpedia:deathYear>1904</dbpedia:deathYear>
    <dbpedia:birthYear>1825</dbpedia:birthYear>
    <foaf:familyName>Jókai</foaf:familyName>
    <foaf:givenName>Mór</foaf:givenName>
    <foaf:name>Jókai Mór (1825-1904)</foaf:name>
    <foaf:name>Mór Jókai</foaf:name>
    <foaf:name>Jókai Mór</foaf:name>
    <owl:sameAs rdf:resource="http://dbpedia.org/resource/M%C3%B3r_J%C3%B3kai"/>
  </foaf:Person>
</rdf:RDF>

Visit the above noted dbpedia data for fun.
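For anyone who wants to poke at this sort of record programmatically, here is a minimal sketch in Python using only the standard library. It parses a trimmed copy of the FOAF record above and pulls out the resource URI, the name variants and the owl:sameAs links. The function name `extract_person` and the trimmed `FOAF_RECORD` string are my own illustrative assumptions, not anything published by the NSZL, and a real Linked Data client would more likely use a proper RDF library than plain XML parsing.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the record shown above (only the namespaces used here).
FOAF_RECORD = """<?xml version="1.0"?>
<rdf:RDF
    xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
    xmlns:foaf="http://xmlns.com/foaf/0.1/"
    xmlns:owl="http://www.w3.org/2002/07/owl#">
  <foaf:Person rdf:about="http://nektar.oszk.hu/resource/auth/33589">
    <foaf:name>Jókai Mór (1825-1904)</foaf:name>
    <foaf:name>Mór Jókai</foaf:name>
    <foaf:name>Jókai Mór</foaf:name>
    <owl:sameAs rdf:resource="http://dbpedia.org/resource/M%C3%B3r_J%C3%B3kai"/>
  </foaf:Person>
</rdf:RDF>"""

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
NS = {
    "rdf": RDF_NS,
    "foaf": "http://xmlns.com/foaf/0.1/",
    "owl": "http://www.w3.org/2002/07/owl#",
}


def extract_person(xml_text):
    """Return the URI, name variants and sameAs links of the first foaf:Person.

    Hypothetical helper for illustration only.
    """
    root = ET.fromstring(xml_text)
    person = root.find("foaf:Person", NS)
    return {
        # rdf:about holds the URI that identifies the person
        "uri": person.get("{" + RDF_NS + "}about"),
        # every foaf:name element is a variant form of the name
        "names": [name.text for name in person.findall("foaf:name", NS)],
        # owl:sameAs points at equivalent resources elsewhere (here, dbpedia)
        "same_as": [
            link.get("{" + RDF_NS + "}resource")
            for link in person.findall("owl:sameAs", NS)
        ],
    }


if __name__ == "__main__":
    print(extract_person(FOAF_RECORD))
```

The owl:sameAs value is the interesting part: it is what lets a client hop from the library's authority record to the corresponding dbpedia description.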

Rich SKOS data is also available for a local information retrieval thesaurus. Follow this link for an example of the skos:prefLabel 'magyar irodalom'.

It's a herculean effort from the NSZL which must be commended. And before the Germans did it too! Goulash all round to celebrate - and a photograph of the Hungarian Parliament, methinks.

Tuesday, 30 March 2010

Social media and the organic farmer

There were some interesting discussions about the fact that online grocery sales grew by 15% last year (three times more than 'traditional' grocery sales) and the role of the web and social media in the disintermediation of supermarkets as the principal means of getting 'artisan foods' to market. Some great success stories were discussed, such as Rude Health and the recently launched Virtual Farmers' Market. However, the familiar problem which the programme highlighted – and the problem which has motivated this blog posting – is the issue of measuring the impact and effectiveness of social media as a marketing tool. Most small businesses had little idea how effective their social media 'strategies' had been and, by the sounds of it, many are randomly tweeting, blogging and setting up Facebook groups in a vain attempt to gain market traction. One commentator (Philip Lynch, Director of Media Evaluation) from Kantar Media Intelligence spoke about tracking "text footprints" left by users on the social web which can then be quantified to determine the level of support for a particular product or supplier. He didn't say much more than that, probably because Kantar's own techniques for measuring impact are a form of intellectual property. It sounds interesting though and I would be keen to see it in action.

But the whole reason for the 'To Tweet or not to Tweet' discussion in the first place was to explore the opportunities to be gleaned by 'artisan food' producers using social media. These are traditionally small businesses with few capital resources and for whom social media presents a free opportunity to reach potential customers. Yet, the underlying (but barely articulated) theme of many discussions on the Food Programme was that serious investment is required for a social media strategy to be effective. The technology is free to use but it takes staff time to develop a suitable strategy, and staff with the requisite communications and technical knowledge. On top of all this, small businesses want to be able to observe the impact of their investment on sales and market penetration. Thus, in the end, it requires outfits like Kantar to orchestrate a halfway effective social media strategy, maintain it, and measure it. Anything short of this will not necessarily help drive sales and may be wholly ineffective. (I can see how social media aficionado Keith Thompson arrived at a name for his blog – any thoughts on this stuff, Keith?) The question therefore presents itself: Are food artisans, or any small business for that matter, being suckered by the false promise of free social media?

Of course, most of the above is predicated upon the assumption that people like me will continue to use social media such as Facebook; but while it continues to update its privacy policy, as it suggested this week on its blog that it will, I will be leaving social media altogether. 'Opting in' for a basic level of privacy should not be necessary.

Monday, 29 March 2010

Students' information literacy: three events collide with cosmic significance...

Firstly, I completed marking student submissions for Business Information Management (LBSIS1036). This is a level one module which introduces web technologies to students; but it is also a module which introduces information literacy skills. These skills are tested in an in-lab assessment in which students can demonstrate their ability to critically evaluate information, ascertain provenance, IPR, etc. To assist them the students are introduced to evaluation methodologies in the sessions preceding the assessment which they can use to test the provenance of information sources found on the 'surface web'.

Students' performance in the assessment was patchy. Those students that invested a small amount of time studying found themselves with marks within the 2:1 to First range; but sadly most didn't invest the preparation time and found themselves in the doldrums, or failing altogether. What was most revealing about their performance was the fact that – despite several taught sessions outlining appropriate information evaluation methodologies – a large proportion of students informed me in their manuscripts that their decision to select a resource was not because it fulfilled particular aspects of their evaluation criteria, but because the resource featured in the top five results within Google and therefore must be reliable. Indeed, the evaluation criteria were dismissed by many students in favour of the perceived reliability of Google's PageRank to provide a resource which is accurate, authoritative, objective, current, and with appropriate coverage. Said one student in response to 'Please describe the evaluation criteria used to assess the provenance of the resource selected': "The reason I selected this resource is that it features within the top five results on Google and therefore is a trustworthy source of information".

Aside from the fact these students completely missed the point of the assessment and clearly didn't learn anything from Chris Taylor or me, it strikes fear in the heart of a man that these students will continue their academic studies (and perhaps their post-university life) without the most basic information literacy skills. It's a depressing thought if one dwells on it for long enough. On the positive side, only one student used Wikipedia...which leads me to the next cosmic event...

Last week I was doing my periodic 'catch up' on some recent research literature. This normally entails scanning my RSS feeds for recently published papers in the journals and flicking through the pages of the recent issues of the Journal of the American Society for Information Science and Technology (JASIST). A paper published in JASIST at the tail end of 2009 caught my eye: 'How and Why Do College Students Use Wikipedia?' by Sook Lim which, compared to the hyper scientific paper titles such as 'A relation between h-index and impact factor in the power-law model' or 'Exploiting corpus-related ontologies for conceptualizing document corpora' (another interesting paper), sounds quite magazine-like. Lim investigated and analysed data on students' perceptions, uses of, and motivations for using Wikipedia in order to better understand student information seeking behaviour. She employed frameworks from social cognitive theory and 'uses and gratification' literature. Her findings are too detailed to summarise here. Suffice to say, Lim found many students to use Wikipedia for academic purposes, but not in their academic work; rather, students used Wikipedia to check facts and figures quickly, or to glean quick background information so that they could better direct their studying. In fact, although students found Wikipedia to be useful for fact checking, etc., their perceptions of its information quality were not high at all. Students knew it to be a suspect source and were sceptical when using it.

After the A&E experience of marking the LBSIS1036 submissions, Lim's results were fantastic news and my spirits were lifted immediately. Students are more discerning than we give them credit for, I thought to myself. Fantastic! 'Information Armageddon' doesn't await Generation Y after all. Imagine my disappointment the following morning when I boarded a train to Liverpool Central to find myself seated next to four students. It was here that I would experience my third cosmic event. Gazing out the train window as the sun was rising over Bootle docks and the majesty of its containerisation, I couldn't help but listen to the students as they were discussing an assignment which they had all completed and were on their journey to submit. The discussion followed the usual format, e.g. "What did you write in your essay?" "How did you structure yours?", etc. It then emerged that all four of them had used Wikipedia as the principal source for their essay and that they simply copied and pasted passages verbatim. In fact, one student remarked, "The lecturer might get suspicious if you copy it directly, so all I do is change the order of any bullet points and paragraphs. I change some of the words used too". (!!!!!!!!!!)

My hope would be that these students get caught cheating because, even without using Turnitin, catching students cheating with sources such as Wikipedia is easy peasy. But a bigger question is whether information literacy instruction is a futile pursuit. Will instant gratification always prevail?

Image: Polaroidmemories (Flickr), CreativeCommons Attribution-Non-Commercial-Share Alike 2.0 Generic

Friday, 26 March 2010

Here cometh the pay wall: the countdown begins...

The trouble is that few yet have the gumption to do it. Murdoch, I suspect, is one of several who think that once there is a critical mass of high profile content providers implementing pay walls then there will be a deluge of others. And I think he is probably correct in this assumption. After all, subscription can actually work. The FT and Wall Street Journal have successfully had subscription models for years (although they admittedly provide an indispensable service to readers in the financial and business sectors). An additional benefit of pay wall proliferation will be the simultaneous decline of news aggregators (which Murdoch has been particularly vexed about recently) and 'citizen journalists', both of which have contributed to the ineffectiveness of advertising as a business model for online newspapers. The truth is that the future of good journalism depends on the success of these subscription-based business models; but the success of this also has implications for other content providers or Internet services experiencing similar problems, social networking services being a prime example.

If you take the time to peruse the page created by BBC News to collect user comments on this story, a depressingly long slew of comments can be found in which it becomes clear that most users (not all, it should be noted) have a huge difficulty with subscription models or simply do not understand what the business problem is. And largely this is down to the fact that most ordinary people think:

- That content providers of all types, not just newspapers, are a bunch of rip-off merchants who are dissatisfied with their lot in the digital sphere;

- That content providers generate abnormal profits from advertising revenue and that their businesses are based on robust business models, and

- That free content, aggregation and 'citizen journalists' fulfil their news or content needs admirably and that high quality journalism is therefore superfluous.

The real truth of course is that newspapers are losing tremendous amounts of money. It seems to be unfashionable to say it – even the great but struggling Guardian chokes on these words – but free can no longer continue. Newspapers across the world have restructured and reinvented themselves to cope with the digital world. But there is only so much rearranging of the Titanic's deck chairs that can occur. The bottom line is that advertising as a business model isn't a business model. (See this, this and this for previous musings on this blog). Facebook is set to be 'cash flow positive' for the first time this financial year. No-one knows how much profit it will generate, although economic analysts suspect it will be small. What does this say about the viability of advertising as a revenue stream when a service with over 500 million users can barely cover costs? But what are Facebook to do? Chris Taylor conducted an unscientific survey with some International MBA students last week, all of whom reported positively on their continued use of Facebook to connect with family and friends at home and within Liverpool Business School. The question was: Would you be willing to pay £3 per annum to access Facebook? The response was unanimous: 'No'.

Sigh.

Thursday, 25 March 2010

How much software is there in Liverpool and is it enough to keep me interested?

In particular I am thinking about my home market in Liverpool, where I am hoping to continue to carve some kind of career in my day job. If there is not enough 'S' to keep me going for another 25 years I'm going to get bored, get poor or get a job at McDonald's.

'S' maintenance is only a relatively minor destination for our business information systems students (still the students with the highest exit salary in the business school, I am told; please send yourself and your children on the BIS course), but it is important to me.

Back in the day job we are working on developing LabCom, a business-to-business tracking system for chemical samples and their results. One of the things that appeals to my mind is the fact that it is building a machine that is making things happen. I like to see how many samples are processed on it a year. Sad, I know. We are delivering various new modules that will hopefully allow it all to grow. However, thus far the project is not really big enough to fund the level of technical development and architecture expertise we would like to deploy: there is not enough 'S' to maintain and fund high-level development capabilities on its own. The alternative for software developers such as my team is to engage in shorter-term development consultancy forays, but for these to be of sustained technical interest they have probably got to add up to 100K or more, and alas we have not worked out how to regularly corner such jobs.

With few software companies in Liverpool I wonder how this translates into the bigger picture and whether we can measure it.

There is definitely some 'S' in Liverpool. I did some work a couple of years back looking at software architecture with my friends at New Mind, who are a national leader in destination management services and have a big crunching bit of software behind it. Angel Solutions are another company with a national footprint, this time in the education sector, backed by source code controlled in Liverpool. I have come across only two or three others over the years, although no doubt there are some hiding. For someone trying to make a career out of having the skills to understand and develop big software, this lack of available candidates in Liverpool might be a bit of a problem. A bit like getting an advanced mountaineering certificate in Norfolk (see News Biscuit).

With this in mind I wondered: is there more or less 'S' under management in Liverpool than elsewhere? Is this really the Norfolk of mountaineering?

How can we know? There are some publicly available records: we could dig up the finances of software companies and similar, although most of these companies (including my own) are principally guns for hire engaging in consultancy and development services.

Liverpool's Software/New Media industry has a number of great companies, such as Liverpool Business School graduate-led Mando Group, Trinity Mirror-owned Ripple Effect and the only big player, Strategic System Solutions. However, as I understand it, these are service delivery and consultancy companies, not software product development companies; they make their money through expertise and contain a relatively low proportion of 'S'. Probably the largest block of software under management will be in the IT departments; no doubt they have a bit of 'S'.

So how could we weigh this, here in Liverpool or elsewhere? How much software is there? Lots of small companies such as my own derive part of their income from owned source code IP ('S'). There are the few larger ones. So we could try to get a list and determine what proportion of income is generated from these outfits, based on public records and a little inside knowledge. We could perhaps measure the number of software developers deployed, or use the traditional measure of SLOC (Source Lines Of Code). In the past I've looked at variants on Mark II Function Point Analysis; you can find out a little about this on the United Kingdom Software Metrics Association website. In my commercial world I'm interested in estimating cost, hence toying with these methods while we ponder what we can get away with charging and whether it is more than our estimated (guessed) cost. In this regional context I'm interested in whether we can measure how much value there is lurking, to give a figure for 'S'.

Imagine we did measure the quantity of commercial code under management in Liverpool; I'll call it 'Liver-S'. I then want to know how this compares to Manchester's 'Manc-S' (I suspect unfavourably) and, perhaps more importantly from a career and commercial point of view, how it compares to last year. Is 'Liver-S' getting bigger (what is 'delta Liver-S')?

The importance of 'S' and 'delta S' is about whether we are maintaining enough work to maintain or indeed develop a capacity to 'do big software' in the local economy. Without which, to be honest, I'm going to get bored.

Any masters/MBA students stuck for a bit of an assignment or, even better, funding bodies wanting to help me answer this question: please drop me a line.
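To make the SLOC idea a little more concrete, here is a rough sketch in Python of how one might count source lines and compute a year-on-year 'delta S'. Everything in it is an assumption for illustration: the function names `count_sloc` and `delta_s` are made up, and "SLOC" is taken in its crudest sense (non-blank lines that are not whole-line comments), which is only one of several possible definitions and says nothing about function points or value.

```python
def count_sloc(source: str, comment_prefix: str = "#") -> int:
    """Count non-blank lines that are not whole-line comments.

    A deliberately crude SLOC definition, assumed for this sketch.
    """
    count = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith(comment_prefix):
            count += 1
    return count


def delta_s(previous_sloc: int, current_sloc: int) -> int:
    """Year-on-year change in code under management ('delta S')."""
    return current_sloc - previous_sloc


# Hypothetical sample: two code lines, one blank line, one comment line.
SAMPLE = "import os\n\n# where are we?\nprint(os.getcwd())\n"

if __name__ == "__main__":
    this_year = count_sloc(SAMPLE)
    print("S =", this_year)
    print("delta S =", delta_s(previous_sloc=1, current_sloc=this_year))
```

Summing a count like this over every codebase a company owns would give a first, very blunt figure for 'S'; the hard part is getting access to the code in the first place.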

If there is not enough 'Liver-S' in the future at least I'll be able to sit at home with my super fast broadband.

Tuesday, 2 February 2010

The 'real' iPad

The video, publicised by BoingBoing, features one of Liverpool's funniest chaps: Peter Serafinowicz. Serafinowicz has managed to release an iPad parody (days after its launch by Apple) in order to plug the release of his latest DVD. He's quick off the mark. Serafinowicz has often included Apple parodies in his shows, such as the iToilet and the MacTini. This follows a similar format and is similarly amusing. Enjoy!

Tuesday, 26 January 2010

Renaissance of thesaurus-enhanced information retrieval?

As good as they are these days, retrieval systems based on automatic indexing (i.e. most web search engines, including Google, Bing, Yahoo!, etc.) suffer from the 'syntax problem'. They provide what appears to be high recall coupled with poor precision. This is the nature of the search engine beast. Conceptually similar items are ignored because such systems are unable to tell that 'Haematobia irritans' is a synonym of 'horn flies' or that 'java' is a term fraught with homonymy (e.g. 'java' the programming language, 'java' the island within the Indonesian archipelago and 'java' the coffee, and so forth). All of the aforementioned contributes to arguably the biggest problem for user searching: query formulation. Search engines suffer from the added lack of any structured browsing of, say, resource subjects, titles, etc. to assist users in query formulation.
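For readers less familiar with the two measures, the following small Python sketch shows how precision and recall are conventionally calculated for a single query. The document identifiers and the relevance judgements are invented purely for illustration (imagine a search for 'java' by someone interested only in the programming language).

```python
def precision(retrieved: set, relevant: set) -> float:
    """Proportion of retrieved items that are relevant."""
    if not retrieved:
        return 0.0
    return len(retrieved & relevant) / len(retrieved)


def recall(retrieved: set, relevant: set) -> float:
    """Proportion of relevant items that were retrieved."""
    if not relevant:
        return 0.0
    return len(retrieved & relevant) / len(relevant)


# Hypothetical result set for the query 'java': documents 3 and 4 are about
# the island and the coffee, so they are retrieved but not relevant.
RETRIEVED = {1, 2, 3, 4}
# Hypothetical relevance judgements: document 5 is relevant but was missed.
RELEVANT = {1, 2, 5}

if __name__ == "__main__":
    print("precision:", precision(RETRIEVED, RELEVANT))
    print("recall:", recall(RETRIEVED, RELEVANT))
```

Homonyms drag precision down by pulling in the island and the coffee; unrecognised synonyms drag recall down by leaving relevant documents unretrieved.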

This blog has discussed query formulation in the search process at various times (see this for example). The selection of search terms for query formulation remains one of the most difficult stages in users' information retrieval process. The huge body of research relating to information seeking behaviour, information retrieval, relevance feedback, and human-computer interaction attests to this. One of the techniques used to assist users in query formulation is thesaurus assisted searching and/or query expansion. Such techniques are not particularly new and are often used in search systems successfully (see Ali Shiri's JASIST paper from 2006).
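As a rough illustration of thesaurus-based query expansion, here is a minimal Python sketch. The tiny thesaurus, the three document titles and the function names are all assumptions made up for the example; a real system would draw on a full information retrieval thesaurus with equivalence, hierarchical and associative relationships, and would not rely on simple substring matching.

```python
# Toy thesaurus: each term maps to its equivalent (synonymous) terms.
THESAURUS = {
    "horn flies": ["haematobia irritans"],
    "haematobia irritans": ["horn flies"],
}

# Hypothetical document collection, keyed by document id.
DOCUMENTS = {
    1: "Control of horn flies on pastured cattle",
    2: "Haematobia irritans resistance to insecticides",
    3: "Java coffee production in Indonesia",
}


def expand_query(query: str, thesaurus: dict) -> list:
    """Return the query term plus any equivalent terms from the thesaurus."""
    term = query.lower().strip()
    return [term] + thesaurus.get(term, [])


def search(terms: list, documents: dict) -> list:
    """Return ids of documents whose text contains any of the terms."""
    return sorted(
        doc_id
        for doc_id, text in documents.items()
        if any(term in text.lower() for term in terms)
    )


if __name__ == "__main__":
    plain = search(["horn flies"], DOCUMENTS)
    expanded = search(expand_query("horn flies", THESAURUS), DOCUMENTS)
    print("without expansion:", plain)
    print("with expansion:", expanded)
```

Without expansion the search misses the document indexed under the scientific name; with expansion both are retrieved, which is exactly the recall improvement thesauri are meant to deliver.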

Last week, however, Google announced adjustments to their search service. This adjustment is particularly significant because it is an attempt to control for synonyms. Their approach is based on 'contextual language analysis' rather than the use of information retrieval thesauri. The blog reads:

"Our systems analyze petabytes of web documents and historical search data to build an intricate understanding of what words can mean in different contexts [...] Our synonyms system is the result of more than five years of research within our web search ranking team. We constantly monitor the quality of the system, but recently we made a special effort to analyze synonyms impact and quality."

Firstly, this is certainly positive news. Synonyms – as noted above – are a well known phenomenon which has blighted the effectiveness of automatic indexing in retrieval. But on the negative side – and not to belittle Google's efforts as they are dealing with unstructured data – Google are only dealing with single words: 'song lyrics' and 'song words', or 'homicide' and 'murder' (examples provided by Google in their blog posting). They are dealing with words in a Roget's Thesaurus sense, rather than compound terms in an information retrieval thesaurus sense – and it is the latter which will ultimately be more useful in improving recall and precision. This is, after all, why information retrieval thesauri have historically been used in searching.

More interesting will be Google's exploration of homonymous terms. Homonyms are more complex than synonyms and are, perhaps for the foreseeable future, an intractable problem.

Friday, 15 January 2010

Death of the book salesman...

Shopping in Glasgow prior to Christmas was a sad time. Borders, which occupied what is reputed to be the most expensive retail space in Glasgow (the old Royal Bank of Scotland building), announced that it was in administration and was flogging all stock in a gargantuan clearance sale. Borders had become an institution since it opened on Buchanan Street in 1997 (I think) and I'm sure branches in other cities were similarly iconic and located at city centre hot-spots, the London Oxford Street branch being another prime example. It was a great place to meet friends before heading out for dinner or drinks; perusing the amazing magazine or newspaper selection, or browsing the books or music. Of course, I stopped buying books there years ago because the genre classification they used made it impossible to find anything; but it nevertheless occupied a special place in my heart...

The official line is that Borders fell victim to the current economic climate, although it was a complicated concatenation of economic circumstances, including aggressive competition from online retailers and particularly supermarkets (those supermarkets again – a £3 copy of the latest Jordan autobiography anyone?), a sales downturn and, finally, a lack of credit from suppliers. Waterstone's remains the only national bookseller but today was responsible for a decline in the share price of HMV as their Chief Executive tries to administer 'bookshop CPR' (i.e. let's make our stores more cosy). Can we expect the closure of it too in the foreseeable future? That would be extremely depressing...

Of course it's all depressing news; but one can't help thinking that the demise of super-selling bookshops was a quagmire of their own making. The collapse in 1995 of the Net Book Agreement (NBA) – the (almost) 100-year-long price fixing of books – was precipitated by Waterstone's in the first place. And it was precisely the collapse of the NBA which enticed Borders to the UK and enabled Amazon to establish UK operations. Neither retailer would have been able to operate with the NBA still in operation (remember the big Amazon book discounts in the late 1990s?). I suppose none of the book retailers anticipated the level of competitive aggression they had unleashed, particularly from supermarkets. Although I think some naivety played a part...

Many years ago I recall enjoying a talk delivered by the Deputy Chairman of John Smith & Son, Willie Anderson. Despite being the oldest bookseller in the English speaking world, John Smith moved off the high street many years ago. You are now most likely to encounter them as the university campus bookseller. During his talk Willie made an interesting point about the lack of business sense in the book selling industry; that the NBA had made all book sellers blind to conventional business practice or simple economic principles such as the laws of supply and demand. Said Willie (as best as I can remember! It was well over 10 years ago!):

"Harry Potter and the Chamber of Secrets was anticipated to be a best seller and an extremely popular title. We [John Smith & Son] had large pre-orders from customers. Yet, virtually all other booksellers were slashing prices and offering ridiculous pre-order discounts on an item which commanded a high price. At John Smith we didn't offer any discounts and we sold every copy at full price, precisely because demand was high. This is normal business practice, but most book retailers appear to be oblivious to this. Book retailers have a lot to learn about competition because they have been protected from it for so long. The industry needs to learn quickly otherwise it will suffer economic difficulties in the future".

What happens now then? There is certainly money to be made in book selling, particularly with the decline of 'good' stockists. There are more books bought now than at any time in history. Perhaps the time is ripe for a renaissance in the classic independent bookshop, of which Reid of Liverpool is archetypal? Supermarkets do not - I think - occupy the same business space as such book sellers, thus allowing the independent retailer to thrive. There wouldn't be any Costa or Starbucks, nor would it occupy a prime retail site, but I think we'd be all the better for it.

(Photo: Laura-Elizabeth, Flickr, Creative Commons)