University bureaucracy was swallowing up my time when this news broke, otherwise I would have commented sooner... But the leaked announcement by Yahoo! that it has decided to 'sunset' Delicious was momentous, and although Delicious may have a future elsewhere, the news remains significant. It's significant because – along with Flickr, which Yahoo! also owns – Delicious was one of the first services which epitomised the new and mysterious 'Web 2.0' concept, when it emerged in 2004.

In 2004 the concept of organising and sharing links on the Web was fresh and new, and Delicious was really the first to offer an innovative solution to save, organise and share bookmarks with friends. Delicious popularised the use of bookmarklets and practically coined the term 'social bookmarking'. It was probably the first Web 2.0 service to make a domain hack cool and not cheap looking (it was http://del.icio.us/ until 2008, and actually called itself del.icio.us initially).

More interestingly, Delicious popularised tagging and – probably more than any other service at that time – launched an avenue of functionality known as 'social tagging'. In the deluge of social tagging research papers that have been published since Delicious was launched, few will not have cited Delicious in their introductions. And, of course, social tagging - or social bookmarking, or collaborative tagging - has come to be one of the defining aspects of Web 2.0. Social tagging has sent shock waves throughout the Web, influencing the design of subsequent social media services and discovery tools, such as digital libraries. Even the ubiquitous (and infamous) tag cloud - which sister service Flickr invented - was adopted by Delicious and rendered infinitely more useful with the uniquely identifiable resources it and its users curated.

And all of the aforementioned was why Yahoo! decided to acquire Delicious in late 2005 for circa $30 million, in what commentators noted as the first attempt by a Web 1.0 company to jump on to the Web 2.0 bandwagon. Of course, since 2005 many of the original Web 2.0 names have found a Web 1.0 home in which to evolve (e.g. Delicious and Flickr @ Yahoo!; YouTube, Picasa, Blogger @ Google; MySpace @ News Corp; etc.). To be sure, Yahoo! paid too much for Delicious; but Yahoo! weren't buying the technology (which at the time of purchase wasn't particularly complex). They were buying the brand, its users and the Web 2.0 kudos. However, Yahoo! failed to capitalise on all of that, and even failed to harness the underlying technology to make Delicious a household name. One would have thought that an injection of Yahoo! R&D would have made Delicious the most innovative social bookmarking service available, with plenty of horizontal integration with other Yahoo! products. Far from innovating, Delicious has been static since its acquisition. Five years on there are numerous social bookmarking services, virtually all of which are more innovative, more exciting and ultimately more useful.

It is the end of an era to be sure. No-one talks about 'Web 2.0' any more because there is no Web 2.0 to point to, and the demise of Delicious is an example of this. Web 2.0 is now about a handful of social media behemoths. To some extent the 'sunsetting' of Delicious is a comment on the utility of tagging and the value that can be mined from tags, and, ergo, the money that can be made from them. Tightening the use of tags is something which has attracted more attention recently from the CommonTag initiative and rival social bookmarking services such as Faviki and ZigTag. TechCrunch suggest that Yahoo! could have made money from Delicious if they had wanted to and that organisational issues prevented Delicious from being profitable. Perhaps they are right. Only a small team would have been required - but it can't have been easy to make money otherwise Yahoo! would have done it. The moment for Delicious is now gone... And this is an obituary of sorts: can you see any company thinking Delicious is a good investment?

The Information Strategy Group at Liverpool Business School, Liverpool John Moores University, offers courses and undertakes research in areas pertaining to information management, business information systems, communications and public relations, and library and information science.

Monday, 24 January 2011

Tuesday, 2 November 2010

Crowd-sourcing faceted information retrieval

This blog has witnessed the demise of several search engines, all of which have attempted to challenge the supremacy of the big innovators - and I would tend to include Yahoo! and Bing before the obvious market leader. Yesterday it was the turn of Blekko to be the next Cuil. Or is it?

Blekko presents a fresh attempt to move web search forward, using a style of retrieval which has hitherto only been successful in systems based on pre-coordinated indexes and combining it with crowd-sourcing techniques. Interestingly, Rich Skrenta - co-founder of Blekko - was also a principal founder of the Dmoz project. Remember Dmoz? When I worked on BUBL years and years ago, I recall considering Dmoz to be an inferior beast. But it remains alive and kicking – and remains popular and relevant to modern web developments with weekly RDF dumps made of its rich, categorised, crowd-sourced content for Linked Data purposes. BUBL, on the other hand, has been static for years.

Flirting with taxonomical organisation and categorisation with Dmoz (as well as crowd-sourcing) has obviously influenced the Blekko approach to search. Blekko provides innovation in retrieval by enabling users to define their very own vertical search indexes using so-called 'slashtags', thus (essentially) providing a quasi form of faceted search. The advantage of this approach is that using a particular slashtag (or facet, if you prefer) in a query increases precision by removing 'irrelevant' results associated with different meanings of the search query terms. Sounds good, eh? Ranganathan would be salivating at such functionality in automatic indexing! To provide some form of critical mass, Blekko has provided hundreds of slashtags that can be used straight away; but the future of slashtags depends on users creating their own, which will be screened by Blekko before being added to their publicly available slashtags list. Blekko users can also assist in weeding out poor results and any erroneous slashtags results (see the video below) thus contributing to the improved precision Blekko purports to have and maintaining slashtag efficacy. In fact, Skrenta proposes that the Blekko approach will improve precision in the longer term. Says Skrenta on the BBC dot.Maggie blog:


"The only way to fix this [precision problem] is to bring back large-scale human curation to search combined with strong algorithms. You have to put people into the mix […] Crowdsourcing is the only way we will be able to allow search to scale to the ever-growing web".

Let's look at a typical Blekko query. I am interested in the new Microsoft Windows mobile OS, and in bona fide reviews of the new OS. Moreover, since I am tech savvy and will have read many reviews, I am only interested in reviews published recently (i.e. within the past two weeks, or so). In Blekko we can search like so…

"windows mobile 7" /tech-reviews /date

…where the /tech-reviews slashtag limits results to genuine reviews published in the technology press and/or associated websites, and the /date slashtag orders the results by date. It works, and works spectacularly well. Skrenta sticks two fingers up at his competitors when in the Blekko promotional video he quips, "Try doing this [type of] search anywhere else!" Blekko provides 'Five use cases where slashtags shine' which - although only using one slashtag - illustrate how the approach can be used in a variety of different queries. Of course, Blekko can still be used like a conventional search engine, e.g. enter a query and get results ranked according to the Blekko algorithm. And on this count – using my own personal 'search engine test queries' - Blekko appears to rank relevant results sensibly and index pages which other search engines either ignore or, if they do index them, normally drown in spam (spam results which these engines rank as more relevant).
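For readers who prefer to see the idea in code, the slashtag query above can be sketched roughly as follows. This is only an illustration of faceted filtering in general, not Blekko's actual implementation: the `SLASHTAGS` site lists, the `Result` record, the `INDEX` contents and the example.com hosts are all hypothetical, and real slashtags are curated by Blekko and its users.

```python
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse

# Hypothetical curated site lists standing in for crowd-sourced slashtags.
SLASHTAGS = {
    "tech-reviews": {"reviews.example.com", "gadgets.example.org"},
}


@dataclass
class Result:
    url: str
    title: str
    published: date


# A toy index; a real engine would query a full-text index instead.
INDEX = [
    Result("http://reviews.example.com/wm7", "Windows Mobile 7 review", date(2010, 10, 20)),
    Result("http://spam.example.net/wm7", "Windows Mobile 7 cheap deals", date(2010, 11, 1)),
    Result("http://gadgets.example.org/wm7-hands-on", "Hands-on: Windows Mobile 7", date(2010, 10, 30)),
    Result("http://reviews.example.com/android", "Android 2.2 review", date(2010, 10, 25)),
]


def parse_query(query):
    """Split a query string into its free-text phrase and its slashtags."""
    terms, tags = [], []
    for token in query.split():
        if token.startswith("/") and len(token) > 1:
            tags.append(token[1:])
        else:
            terms.append(token)
    return " ".join(terms).strip('"'), tags


def search(query, index):
    """Match the phrase, keep only hosts listed under each slashtag, then sort."""
    phrase, tags = parse_query(query)
    hits = [r for r in index if phrase.lower() in r.title.lower()]
    for tag in tags:
        if tag == "date":
            continue  # treated as a sort instruction here, not a filter
        allowed = SLASHTAGS.get(tag, set())  # an unknown slashtag matches nothing
        hits = [r for r in hits if urlparse(r.url).netloc in allowed]
    if "date" in tags:
        hits.sort(key=lambda r: r.published, reverse=True)  # newest first
    return hits


for hit in search('"windows mobile 7" /tech-reviews /date', INDEX):
    print(hit.published, hit.url)
```

In this toy index the plain phrase search matches three pages, one of which is spam; adding the slashtag leaves only the two pages from the curated hosts, which is the precision gain described above in miniature.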

There is a lot to admire about Blekko. Aside from an innovative approach to information retrieval, there is also a commitment to algorithm openness and transparency which SEO people will be pleased about; but I worry that while a Blekko slashtag search is innovative and useful, most users will approach Blekko as another search engine rather than buying into the importance of slashtags and, in doing so will not hang around long enough to 'get it' (even though I intend to...). Indeed, to some extent Blekko has more in common with command line searching of the online databases in the days of yore. There are also some teething troubles which rigorous testing can reveal. But there are reasons to be hopeful. Blekko is presumably hoping to promote slashtag popularity and have users following slashtags just as users follow Twitter groups, thus driving website traffic and presumably advertising. Being the owner of that slashtag could be useful, but also highly profitable, even if Blekko remains small.

blekko: how to slash the web from blekko on Vimeo.

Monday, 26 July 2010

The end of social networking or just the beginning?

Today the Guardian's Digital Content blog carries an article by Charles Arthur in which he waxes lyrical about the fact that social networking - as a technological and social phenomenon - has reached its apex. As Arthur writes:


"I don't think anyone is going to build a social network from scratch whose only purpose is to connect people. We've got Facebook (personal), LinkedIn (business) and Twitter (SMS-length for mobile)."

Huh. Maybe he's right? The monopolisation of the social networking market is rather unfortunate and, I suppose, rather unhealthy - but it is probably and ultimately necessary owing to the current business models of social media (i.e. you've got to have a gargantuan user base to turn a profit). The 'big three' (above) have already trampled over the others to get to the top out of necessity.

However, Arthur's suggestion is that 'standalone' social networking websites are dead, rather than social networking itself. Social networking will, of course, continue; but it will be subsumed into other services as part of a package. How successful these will be is anyone's guess. This situation is contrary to what many commentators forecast several years ago. Commentators predicted an array of competing social networks, some highly specialised and catering for niche interests. Some have already been and gone; some continue to limp on, slowly burning the cash of venture capitalists. Researchers also hoped - and continue to hope - for open applications making greater use of machine readable data on foaf:persons using, erm, FOAF.

The bottom line is that it's simply too difficult to move social networks. For a variety of reasons, Identi.ca is generally acknowledged to be an improvement on Twitter, offering greater functionality and open-source credentials (FOAF support anyone?); but persuading people to move is almost impossible. Moving results in a loss of social capital and users' labour, hence recent work in metadata standards to export your social networking capital. Yet, it is not in the interests of most social networks to make users' data portable. Monopolies are therefore always bound to emerge.

But is privacy the elephant in the room? Arthur's article omits the privacy furore which has pervaded Facebook in recent months. German data protection officials have launched a legal assault on Facebook for accessing and saving the personal data of people who don't even use the network, for example. And I would include myself in the group of people one step away from deleting their Facebook account. Enter diaspora: diaspora (what a great name for a social network!) is a "privacy aware, personally controlled, do-it-all, open source social network". The diaspora team vision is very exciting and inspirational. These are, after all, a bunch of NYU graduates with an average age of 20.5 and ace computer hacking skills. Scheduled for a September 2010 launch, diaspora will be a piece of open-source personal web server software designed to enable a distributed and decentralised alternative to services such as Facebook. Nice. So, contrary to Arthur's article, there are new, innovative, standalone social networks emerging and being built from scratch. diaspora has immense momentum and taps into the increasing suspicion that users have of corporations like Facebook, Google and others.

Sadly, despite the exciting potential of diaspora, I fear they are too late. Users are concerned about privacy. It is a misconception to think that they aren't; but valuing privacy over social capital is a difficult choice for people that lead a virtual existence. Jettison five years of photos, comments, friendships, etc. or tolerate the privacy indiscretions of Facebook (or other social networks)? That's the question that users ask themselves. It again comes down to data portability and the transfer of social capital and/or user labour. diaspora will, I am sure, support many of the standards to make data portability possible, but will Facebook make it possible to output and export your data to diaspora? Probably not. I nevertheless watch the progress of diaspora closely and I hope, just hope they can make it a success. Good luck, chaps!

Monday, 28 June 2010

Love me, connect me, Facebook me

This is just a quick post to alert readers to the recent existence of a dedicated MA/MSc/PG Dip. Information & Library Management Facebook group page. The page is for current and prospective ILM students, but should also prove useful to alumni and enable networking between former students and/or other information professionals.

Being a Facebook group page it is accessible to everyone; however, those of you with Facebook accounts can become fans to be kept abreast of programme news, events, research activity, industry developments and so forth. Click the "Like it!" button!

(Image: Luc Legay, Flickr, Creative Commons)

Tuesday, 30 March 2010

Social media and the organic farmer

The latest Food Programme was broadcast yesterday by BBC Radio 4 and made for some interesting listening. (Listen again at iPlayer.) In it Sheila Dillon visited the Food and Drink Expo 2010 at the Birmingham NEC and, rather than discussing the food, her focus was encapsulated in the programme slogan, 'To Tweet or not to Tweet', which was also the name of a panel debate at the Expo. Twitter was not the principal programme focus though. The programme explored social media generally and its use by small farmers and food producers to communicate with customers.

There were some interesting discussions about the fact that online grocery sales grew by 15% last year (three times more than 'traditional' grocery sales) and the role of the web and social media in the disintermediation of supermarkets as the principal means of getting 'artisan foods' to market. Some great success stories were discussed, such as Rude Health and the recently launched Virtual Farmers' Market. However, the familiar problem which the programme highlighted – and the problem which has motivated this blog posting – is the issue of measuring the impact and effectiveness of social media as a marketing tool. Most small businesses had little idea how effective their social media 'strategies' had been and, by the sounds of it, many are randomly tweeting, blogging and setting up Facebook groups in a vain attempt to gain market traction. One commentator (Philip Lynch, Director of Media Evaluation) from Kantar Media Intelligence spoke about tracking "text footprints" left by users on the social web which can then be quantified to determine the level of support for a particular product or supplier. He didn't say much more than that, probably because Kantar's own techniques for measuring impact are a form of intellectual property. It sounds interesting though and I would be keen to see it in action.

But the whole reason for the 'To Tweet or not to Tweet' discussion in the first place was to explore the opportunities to be gleaned by 'artisan food' producers using social media. These are traditionally small businesses with few capital resources and for whom social media presents a free opportunity to reach potential customers. Yet, the underlying (but barely articulated) theme of many discussions on the Food Programme was that serious investment is required for a social media strategy to be effective. The technology is free to use but it involves staff resource to develop a suitable strategy, and a staff resource with the communications and technical knowledge. On top of all this, small businesses want to be able to observe the impact of their investment on sales and market penetration. Thus, in the end, it requires outfits like Kantar to orchestrate a halfway effective social media strategy, maintain it, and to measure it. Anything short of this will not necessarily help drive sales and may be wholly ineffective. (I can see how social media aficionado Keith Thompson arrived at a name for his blog – any thoughts on this stuff, Keith?) The question therefore presents itself: Are food artisans, or any small business for that matter, being suckered by the false promise of free social media?

Of course, most of the above is predicated upon the assumption that people like me will continue to use social media such as Facebook; but while it continues to update its privacy policy, as it suggested this week on its blog that it will, I will be leaving social media altogether. 'Opting in' for a basic level of privacy should not be necessary.

Friday, 9 October 2009

Wave a washout?

This is just a brief posting to flag up a review of Google Wave on the BBC dot.life blog.

Google unveiled Wave at their Google I/O conference in late May 2009. The Wave development team presented a lengthy demonstration of what it can do and – given that it was probably a well rehearsed presentation and demo – Wave looked pretty impressive. It might be a little bit boring of me, but I was particularly impressed by the context sensitive spell checker ("Icland is an icland" – amazing!). Those of you that missed that demonstration can check it out in the video below. And try not to get annoyed at the sycophantic applause of their fellow Google developers...

Since then Wave has been hyped up by the technology press and even made mainstream news headlines at the BBC, Channel 4 News, etc. when it went on limited (invitation only) release last week. Dot.life has reviewed Wave and the verdict was not particularly positive. Surprisingly they (Rory Cellan-Jones, Stephen Fry, Bill Thompson and others) found it pretty difficult to use and pretty chaotic. I'm now anxious to try it out myself because I was convinced that it would be pretty amazing. Their review is funny and worth reading in full; but the main issues were noted as follows:


"Well, I'm not entirely sure that our attempt to use Google Wave to review Google Wave has been a stunning success. But I've learned a few lessons.

First of all, if you're using it to work together on a single document, then a strong leader (backed by a decent sub-editor, adds Fildes) has to take charge of the Wave, otherwise chaos ensues. And that's me - so like it or lump it, fellow Wavers.

Second, we saw a lot of bugs that still need fixing, and no very clear guide as to how to do so. For instance, there is an "upload files" option which will be vital for people wanting to work on a presentation or similar large document, but the button is greyed out and doesn't seem to work.

Third, if Wave is really going to revolutionise the way we communicate, it's going to have to be integrated with other tools like e-mail and social networks. I'd like to tell my fellow Wavers that we are nearly done and ready to roll with this review - but they're not online in Wave right now, so they can't hear me.

And finally, if such a determined - and organised - clutch of geeks and hacks struggle to turn their ripples and wavelets into one impressive giant roller, this revolution is going to struggle to capture the imagination of the masses."

My biggest concern about Wave was the important matter of critical mass, and this is something the dot.life review hints at too. A tool like Wave is only ever going to take off if large numbers of people buy into it; if your organisation suddenly dumps all existing communication and collaboration tools in favour of Wave. It's difficult to see that happening any time soon...

Thursday, 13 August 2009

Trough of disillusionment for microblogging and social software?

The IT research firm Gartner has recently published another of its technology reports for 2009: Gartner's Hype Cycle Special Report for 2009. This report is another in a long line of similar Gartner reports which do exactly what they say on the tin. That is, they provide a technology 'hype cycle' for 2009! Did you see that coming?! The technology hype cycle was a topic that Johnny Read recently discussed at an ISG research reading group, so I thought it was worth commenting on.

According to Gartner - who I believe introduced the concept of the technology hype cycle - expectations of a new or emerging technology grow far more quickly than the technology itself. This is obviously problematic since user expectations get inflated only to be deflated later as the true value of the technology slowly becomes recognised. This true value is normally reached when the technology experiences mainstream use (i.e. plateau of productivity). The figure below illustrates the basic principles behind the hype cycle model.

The latest Gartner hype cycle (below) is interesting - and interesting is really as far as you can go with this because it's unclear how the hype cycles are compiled and whether they can be used for forecasting or as a true indicator of technology trends. Nevertheless, according to the hype cycle 2009, microblogging and social networking are on the descent into the trough of disillusionment.

From a purely personal view this is indeed good news as it might mean I don't have to read about Twitter in virtually every technology newspaper, blog and website for much longer, or be exposed to a woeful interview of the Twitter CEO on Newsnight. But I suppose it is easy to anticipate the plateau of productivity for these technologies. Social software has been around for a while now, and my own experiences would suggest that many people are starting to withdraw from it; the novelty has worn off. And remember, it's not just users that perpetuate the hype cycle; those wishing to harness the social graph for directed advertising, marketing, etc. are probably sliding down the trough of disillusionment too, as the promise of a captive audience has not been financially fulfilled.

It's worth perusing the Gartner report itself - interesting. The above summary hype cycle figure doesn't seem to be available at the report, so I've linked to the version available at the BBC dot.life blog which also discusses the report.

Wednesday, 1 July 2009

When Web 2.0 business models and accessibility collide with information services and e-learning...

Rory Cellan-Jones has today posted his musings on the current state of Facebook at the BBC dot.life blog. His posting was inspired by an interview with Sheryl Sandberg (Chief Operating Officer) and was originally billed as 'Will Facebook ever make any money?'. Sandberg was recruited from Google last year to help Facebook turn a financial corner. According to her interview with Cellan-Jones, Facebook is still failing to break even, but her projections are that Facebook will start to turn a profit by the end of 2010. If true, this will be good news for Facebook. Not everyone believes this of course, including Cellan-Jones, judging by his questions, his raised left eyebrow and his prediction that tighter EU regulation will harm Facebook growth. Says Cellan-Jones:

"And [another] person I met at Facebook's London office symbolised the firm's determination to deal with its other challenge - regulation.

Richard Allan, a former Liberal Democrat MP and then director of European government affairs at Cisco, has been hired to lobby European regulators for Facebook.

With the EU mulling over tighter privacy rules for firms that share their users' data, and with continuing concern from politicians about issues like cyber-bullying and hate-speak on social networks, there will be plenty on Mr Allan's plate.

So, yes, Facebook suddenly looks like a mature business, poised for steady progress towards profitability and ready to engage in grown-up conversations about its place in society. Then again, so did MySpace a year ago, until it suddenly went out of fashion."

This is all by way of introduction, because a few weeks ago I attended the CILIP MmIT North West day conference on 'Emerging technologies in the library' at LJMU. A series of interesting speakers, including Nick Woolley, Russell Prue and Jane Secker, pondered the use of new technologies in e-learning, digital libraries and other information services. Of course, one of the recurring themes to emerge throughout the day was the innovative use of social networking tools in e-learning or digital library contexts. To be sure, there is some innovative work going on; but none of the speakers addressed two elephants in the room:

- Service longevity, and;

- Accessibility

The adoption of Facebook, YouTube, MySpace, Twitter (and the rest) within universities has been rapid. Many in the literature and at conferences evangelise about the adoption of these tools as if their use was now mandatory. Nick Woolley voiced sensible concerns over this position. An additional concern that I have – and one I had hoped to verbalise at some point during the proceedings – is whether it is appropriate for services (whether e-learning or digital libraries, or whatever) to be going to the effort of embedding these technologies within curricula or services when they are third party services over which we have little control and when their economic futures are so uncertain.

The magic word at the MmIT event was 'free'. "Make use of this tool – it's free and the kids love it!". Very few of the tools which LISers and learning technologists get excited about actually have viable business models. Google lost almost $500 million on YouTube in the year up to April 2009 and is unable to turn it into a viable business. MySpace is struggling and slashing staff, Facebook's future remains uncertain, Twitter currently has no business model at all and is being propped up by venture capitalists while it contemplates desperate ways to create revenue, and so the list continues. Will any of these services still be here next year? Well published and straight talking advertising consultant, George Parker, has been pondering the state of social media advertising on his blog recently (warning – he is straight talking and profanities are the order of the day!). He has insightful comments to make though on why most of these services are never going to make spectacular amounts of money from their current (failed?) model (i.e. advertising). According to Parker, advertising is just plain wrong. Niche markets where subscriptions are required will be the only way for these services to make decent money...

A more general concern relates to the usability and accessibility of social networking services. Very few of them, if any, actually come close to minimal W3C accessibility guidelines, or DDA and the Special Educational Needs and Disability Act (SENDA) 2001. Surely there are legal and ethical questions to be asked, particularly of universities? Embedding these third party services into curricula seems like a good idea but it's one which could potentially exclude students from the same learning experience as others. This is a concern I have had for a few years now, but I had thought it would, a) have been resolved by services voluntarily by now, and, b) institutions wishing to deploy them would have taken measures to resolve it (this might be not using them at all!). Obviously not...

There are many arguments for not engaging with Web 2.0 at university, and - where appropriate - many of these arguments were cogently made at the MmIT conference. But if adopting such technologies is considered to be imperative, should we not be making more of an effort to develop tools that replicate their functionality, thus allowing control over their longevity and accessibility? Attempts at this have hitherto been pooh-poohed on the grounds that interrupting habitual student behaviour (i.e. getting students to switch from, say, Facebook to an academic equivalent) was too onerous, or that replicating the social mass and collaborative appeal of international networking sites couldn't be done within academic environments. But have we really tried hard enough? Most have been half-baked efforts. It is also noteworthy that research conducted by Mike Thelwall and published in JASIST indicates that homophily continues within social networking websites. If this is true, then it is likely that getting students to make the switch to locally hosted equivalents of Facebook or MySpace is certainly possible, particularly as the majority of their network will comprise similar people within similar academic situations.

Perhaps there is more of a need for the wider adoption of social web markup languages, such as the User Labor Markup Language (ULML), to enable users to switch between disparate social networking services whilst simultaneously allowing the portability of social capital (or 'user labour') from one service to another? This would make the decision to adopt academic equivalents far more attractive. However, if this is the case, then more research needs to be undertaken to extend ULML (and other options) to make them fully interoperable with the breadth of services currently available.

I don't like putting a downer on all the innovative and excellent work that the LIS and e-learning communities are doing in this area; it's just that many seem to be oblivious to these threats and are content to carry on regardless. Nothing good ever comes from carrying on regardless, least of all that dreadful tune by the Beautiful South. Let's just talk about it a bit more and actually acknowledge these issues...

Friday, 26 June 2009

Read all about it: interesting contributions at ISKO-UK 2009

I had the pleasure of attending the ISKO-UK 2009 conference earlier this week at University College London (UCL), organised in association with the Department of Information Studies. This was my first visit to the home of the architect of Utilitarianism, Jeremy Bentham, and the nearby St. Pancras International since it has been revamped - and what a smart train station it is.

[...] to make assumptions about what the image might be. Of course, using any major search engine we discover that this approach is woefully inaccurate. Dr. Town has developed improved approaches to content-based image retrieval (CBIR) which provide a novel way of bridging the 'semantic gap' between the retrieval model used by the system and that of the user. His approach is founded on the "notion of an ontological query language, combined with a set of advanced automated image analysis and classification models". This approach has been so successful that he has founded his own company, Imense. The difference in performance between Imense and Google is staggering and has to be seen to be believed. Examples can be found in his presentation slides (which will be on the ISKO website soon), but can also be observed by simply messing around on the Imense Picture Search.

Chris Town's second paper essentially explored how best to do the CBIR image processing required for the retrieval system. According to Dr. Town there are approximately 20 billion images on the web, with the majority at a high resolution, meaning that by his calculation it would take 4000 years to undertake the necessary CBIR processing to facilitate retrieval! Phew! Large-scale grid computing options therefore have to be explored if the approach is to be scalable. Chris Town and his colleague Karl Harrison therefore undertook a series of CBIR processing evaluations by distributing the required computational task across thousands of Grid nodes. This distributed approach resulted in the processing of over 25 million high resolution images in less than two weeks, thus making grid processing a scalable option for CBIR.
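As a rough back-of-envelope check on those figures, the short Python sketch below works out what they imply. It assumes (my assumption, not something stated in the paper) that the 4000-year estimate refers to processing images one after another on a single machine, and it treats "less than two weeks" as exactly 14 days, so the grid throughput is a lower bound. The function and variable names are my own.

```python
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY


def seconds_per_image(total_images: float, total_years: float) -> float:
    """Processing time per image implied by a serial estimate."""
    return total_years * SECONDS_PER_YEAR / total_images


def throughput(images: float, days: float) -> float:
    """Images processed per second over the given number of days."""
    return images / (days * SECONDS_PER_DAY)


# Figures quoted in the talk: 20 billion images, 4000 years of processing.
per_image = seconds_per_image(20e9, 4000)

# Grid evaluation: over 25 million images in (at most) two weeks.
grid_rate = throughput(25e6, 14)

# How much faster the grid run was than the assumed serial rate.
speedup = grid_rate * per_image

# Time to cover the whole 20 billion images at the observed grid rate.
years_for_whole_web = 20e9 / grid_rate / SECONDS_PER_YEAR

print(f"Implied serial cost: {per_image:.1f} s per image")
print(f"Grid throughput:     {grid_rate:.1f} images per second")
print(f"Effective speed-up:  {speedup:.0f}x")
print(f"Whole web at that rate: {years_for_whole_web:.0f} years")
```

On these assumptions the serial estimate works out at roughly six seconds per image, while the grid run managed about 20 images per second, an effective speed-up of around 130 times.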

Andreas Vlachidis (et al.) from the Hypermedia Research Unit at the University of Glamorgan described the use of 'information extraction' employing Natural Language Processing (NLP) techniques to assist in the semantic indexing of archaeological text resources. Such 'Grey Literature' is a good test bed as more established indexing techniques are insufficient in meeting user needs. The aim of the research is to create a system capable of being "semantically aware" during document indexing. Sounds complicated? Yes – a little. Vlachidis is achieving this by using a core cultural heritage ontology and the English Heritage Thesauri to support the 'information extraction' process, which in turn supports "a semantic framework in which indexed terms are capable of supporting semantic-aware access to on-line resources".

Perhaps the most interesting aspect of the conference was that it was well attended by people outside the academic fraternity, and as such there were papers on how these organisations are doing innovative work with a range of technologies, specifications and standards which, to a large extent, remain the preserve of researchers and academics. Papers were delivered by technical teams at the World Bank and Dow Jones, for example. Perhaps the most interesting contribution from the 'real world' though was that delivered by Tom Scott, a key member of the BBC's online and technology team. Tom is a key proponent of the Semantic Web and Linked Data at the BBC and his presentation threw light on BBC activity in this area – and rather coincidentally complemented an accidental discovery I made a few weeks ago.

Tom currently leads the BBC Earth project which aims to bring more of the BBC's Natural History content online and bring the BBC into the Linked Data cloud, thus enabling intelligent linking, re-use, re-aggregation, with what's already available. He provided interesting examples of how the BBC was exposing structured data about all forms of BBC programming on the Web by adopting a Linked Data approach and he expressed a desire for users to traverse detailed and well connected RDF graphs. Says Tom on his blog:

Pre-print papers from the conference are available on the proceedings page of the ISKO-UK 2009 website; however, fully peer reviewed and 'added value' papers from the conference are to be published in a future issue of Aslib Proceedings.

The ISKO conference theme was 'content architecture', with a particular focus on:

- "Integration and semantic interoperability between diverse resources – text, images, audio, multimedia

- Social networking and user participation in knowledge structuring

- Image retrieval

- Information architecture, metadata and faceted frameworks"

[...] Semantic Web inspired approaches to resolving or ameliorating common problems within our disciplines. There were a great many interesting papers delivered and it is difficult to say something about them all; however, for me, there were particular highlights (in no particular order)...

Libo Eric Si (et al.) from the Department of Information Science at Loughborough University described research to develop a prototype middleware framework between disparate terminology resources to facilitate subject cross-browsing of information and library portal systems. A lot of work has already been undertaken in this area (see, for example, the HILT project (a project in which I used to be involved) and CrissCross), so it was interesting to hear about his 'bag' approach in which – rather than using precise mappings between different Knowledge Organisation Systems (KOS) (e.g. thesauri, subject heading lists, taxonomies, etc.) - "a number of relevant concepts could be put into a 'bag', and the bag is mapped to an equivalent DDC concept. The bag becomes a very abstract concept that may not have a clear meaning, but based on the evaluation findings, it was widely-agreed that using a bag to combine a number of concepts together is a good idea".
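To make the 'bag' idea a little more concrete, here is a minimal Python sketch of how I understand it: concepts from different vocabularies are grouped loosely, and the group as a whole is mapped to a single DDC class, rather than each term being mapped precisely. This is my own illustration and not the Loughborough prototype; the `Bag` class, the `ddc_for` and `cross_browse` functions, the terms and the DDC assignments are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bag:
    """A loose grouping of concepts mapped, as a whole, to one DDC class."""
    concepts: frozenset
    ddc_notation: str
    ddc_caption: str


# Hypothetical bags: the terms and DDC assignments are illustrative only.
BAGS = [
    Bag(frozenset({"information retrieval", "search engines", "indexing"}),
        "025.04", "Information storage and retrieval systems"),
    Bag(frozenset({"libraries", "librarianship", "information science"}),
        "020", "Library and information sciences"),
]


def ddc_for(term, bags=BAGS):
    """Return the DDC classes of every bag that contains the term."""
    needle = term.strip().lower()
    return [(b.ddc_notation, b.ddc_caption) for b in bags if needle in b.concepts]


def cross_browse(term, bags=BAGS):
    """Return the other concepts that share a bag with the term."""
    needle = term.strip().lower()
    related = set()
    for bag in bags:
        if needle in bag.concepts:
            related |= bag.concepts - {needle}
    return sorted(related)


print(ddc_for("Indexing"))
print(cross_browse("Indexing"))
```

The point of the sketch is that a user arriving with a term from one vocabulary can be routed to a DDC class, and to neighbouring terms from other vocabularies, without anyone having to maintain exact term-to-term mappings.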

Brian Matthews (et al.) reported on an evaluation of social tagging and KOS. In particular, they investigated ways of enhancing social tagging via KOS, with a view to improving the quality of tags and, in turn, retrieval performance. A detailed and robust methodology was provided, but essentially groups of participants were given the opportunity to tag resources using tags, controlled terms (i.e. from KOS), or terms displayed in a tag cloud, all within a specially designed demonstrator. Participants were later asked to try alternative tools in order to gather data on the nature of user preferences. There are numerous findings - and a pre-print of the paper is already available on the conference website so you can read these yourself - but the main ones, some of them surprising, can be summarised from their paper as follows:

- "Users appreciated the benefits of consistency and vocabulary control and were potentially willing to engage with the tagging system;

- There was evidence of support for automated suggestions if they are appropriate and relevant;

- The quality and appropriateness of the controlled vocabulary proved to be important;

- The main tag cloud proved problematic to use effectively; and,

- The user interface proved important along with the visual presentation and interaction sequence."

"There was general sentiment amongst the depositors that choosing terms from a controlled vocabulary was a "Good Thing" and better than choosing their own terms. The subjects could overall see the value of adding terms for information retrieval purposes, and could see the advantages of consistency of retrieval if the terms used are from an authoritative source."

Chris Town from the University of Cambridge Computer Laboratory presented two (see [1], [2]) equally interesting papers relating to image retrieval on the Web. Although images and video now comprise the majority of Web content, the vast majority of retrieval systems essentially use text, tags, etc. that surround images in order to [...]

Chris Town's second paper essentially explored how best to do the CBIR image processing required for the retrieval system. According to Dr. Town there are approximately 20 billion images on the web, with the majority at a high resolution, meaning that by his calculation it would take 4000 years to undertake the necessary CBIR processing to facilitate retrieval! Phew! Large-scale grid computing options therefore have to be explored if the approach is to be scalable. Chris Town and his colleague Karl Harrison therefore undertook a series of CBIR processing evaluations by distributing the required computational task across thousands of Grid nodes. This distributed approach resulted in the processing of over 25 million high resolution images in less than two weeks, thus making grid processing a scalable option for CBIR.
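
Out of curiosity, the quoted figures can be turned into a back-of-envelope calculation. This is only a rough sketch: I am assuming the 4000 year figure refers to a single machine working serially, that all images cost about the same to process, and that "less than two weeks" can be rounded up to 14 days. The `cbir_estimates` function is my own invention, not anything from the paper.

```python
SECONDS_PER_DAY = 24 * 3600
DAYS_PER_YEAR = 365.25


def cbir_estimates(total_images=20e9, serial_years=4000,
                   grid_images=25e6, grid_days=14):
    """Rough estimates derived from the figures quoted in the talk.

    Assumes the serial figure is for one machine and that every image
    costs roughly the same to process (both are simplifications).
    """
    serial_seconds = serial_years * DAYS_PER_YEAR * SECONDS_PER_DAY
    # Implied serial processing cost of one image.
    seconds_per_image = serial_seconds / total_images
    # Serial time the grid batch would have needed at that rate.
    batch_serial_days = grid_images * seconds_per_image / SECONDS_PER_DAY
    # Effective speed-up; a lower bound because grid_days is an upper bound.
    speedup = batch_serial_days / grid_days
    # Time for the whole Web at the same grid throughput (an upper bound).
    full_web_years = (total_images / grid_images) * grid_days / DAYS_PER_YEAR
    return {
        "seconds_per_image": seconds_per_image,
        "batch_serial_days": batch_serial_days,
        "speedup": speedup,
        "full_web_years": full_web_years,
    }
```

On these assumptions the implied cost is roughly 6.3 seconds per image, the 25 million image batch would have taken about five years on one machine, and the grid run represents an effective speed-up of at least 130 or so. At the same throughput the whole Web would still take around 30 years, so any real deployment would presumably need considerably more grid capacity than the evaluation used.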

Andreas Vlachidis (et al.) from the Hypermedia Research Unit at the University of Glamorgan described the use of 'information extraction' techniques employing Natural Language Processing (NLP) to assist in the semantic indexing of archaeological text resources. Such 'Grey Literature' is a good test bed as more established indexing techniques are insufficient in meeting user needs. The aim of the research is to create a system capable of being "semantically aware" during document indexing. Sounds complicated? Yes – a little. Vlachidis is achieving this by using a core cultural heritage ontology and the English Heritage Thesauri to support the 'information extraction' process and provide "a semantic framework in which indexed terms are capable of supporting semantic-aware access to on-line resources".

Perhaps the most interesting aspect of the conference was that it was well attended by people outside the academic fraternity, and as such there were papers on how non-academic organisations are doing innovative work with a range of technologies, specifications and standards which, to a large extent, remain the preserve of researchers and academics. Papers were delivered by technical teams at the World Bank and Dow Jones, for example. Perhaps the most interesting contribution from the 'real world' though was that delivered by Tom Scott, a key member of the BBC's online and technology team. Tom is a key proponent of the Semantic Web and Linked Data at the BBC and his presentation threw light on BBC activity in this area – and rather coincidentally complemented an accidental discovery I made a few weeks ago.

Tom currently leads the BBC Earth project which aims to bring more of the BBC's Natural History content online and bring the BBC into the Linked Data cloud, thus enabling intelligent linking, re-use and re-aggregation with what's already available. He provided interesting examples of how the BBC was exposing structured data about all forms of BBC programming on the Web by adopting a Linked Data approach and he expressed a desire for users to traverse detailed and well connected RDF graphs. Says Tom on his blog:

"To enable the sharing of this data in a structured way, we are using the linked data approach to connect and expose resources i.e. using web technologies (URLs and HTTP etc.) to identify and link to a representation of something, and that something can be person, a programme or an album release. These resources also have representations which can be machine-processable (through the use of RDF, Microformats, RDFa, etc.) and they can contain links for other web resources, allowing you to jump from one dataset to another."

Whilst Tom conceded that this work is small compared to the entire output and technical activity at the BBC, it still constitutes a huge volume of data and is significant owing to the BBC's pre-eminence in broadcasting. Tom even reported that a SPARQL endpoint will be made available to query this data. I had actually hoped to ask Tom a few questions during the lunch and coffee breaks, but he was such a popular guy that in the end I lost my chance, such is the existence of a popular techie from the Beeb.
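
For anyone unfamiliar with the linked data idea Tom describes, the "jump from one dataset to another" can be sketched with a toy set of triples. Everything here is hypothetical: the `example.org` URIs, the data and the `describe` and `follow` helpers are mine, and real RDF would use URIs for the predicates too. This is not the BBC's data model.

```python
# Toy subject-predicate-object triples. The URIs and data are invented;
# the two prefixes stand in for two separately published datasets.
BBC = "http://example.org/bbc/"
MUSIC = "http://example.org/music/"

TRIPLES = [
    (BBC + "programme/p1", "title", "Example Session"),
    (BBC + "programme/p1", "features", MUSIC + "artist/a1"),
    (MUSIC + "artist/a1", "name", "Example Artist"),
    (MUSIC + "artist/a1", "released", MUSIC + "album/r1"),
    (MUSIC + "album/r1", "title", "Example Album"),
]


def describe(uri):
    """Return all (predicate, object) pairs stated about a resource."""
    return [(p, o) for s, p, o in TRIPLES if s == uri]


def follow(uri, *predicates):
    """Follow a chain of predicates from uri and return what is reached."""
    current = [uri]
    for predicate in predicates:
        current = [o for s, p, o in TRIPLES
                   if s in current and p == predicate]
    return current
```

Here `follow(BBC + "programme/p1", "features", "released", "title")` starts at a programme in one dataset and ends at an album title in the other, simply by following identifiers. A SPARQL endpoint would let you express the same traversal as a query rather than as code.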


Tuesday, 7 April 2009

Web 2.0? Show me the money!

Just a quick post... Today the Guardian blog reports on the financial woes of YouTube. I don't suppose we should be particularly surprised to learn that according to some news sources YouTube is due to lose $470 million this year. When this figure is compared to the $1.65 billion price tag Google paid a couple of years ago we can appreciate the magnitude of their YouTube predicament. The majority of this loss is attributable to the failure of advertising to bring home the bacon; a recurring issue on this blog. But huge running costs, copyright and royalty issues have played their part too. Google is reportedly interested in purchasing Twitter, but surely their failure to monetise YouTube - a service arguably more monetisable (?) than Twitter - should have the alarm bells ringing at Google HQ?

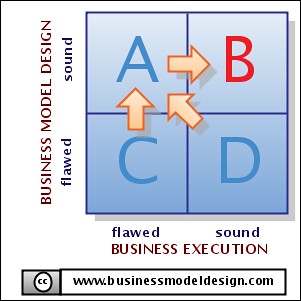

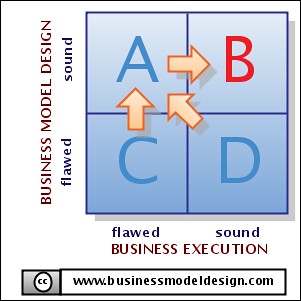

I find the current crossroads for many of these services utterly fascinating. I don't have any solutions for any of these ventures, other than to make sure you have a business model before starting any business. Would RBS give me a business loan without a business plan and a robust revenue model? Probably not. But then they are not giving loans out these days anyway...


Thursday, 12 February 2009

FOAF and political social graphs

While catching up on some blogs I follow, I noticed that the Semantic Web-ite Ivan Herman posted comments regarding the US Congress SpaceBook – a US political answer to Facebook. He, in turn, was commenting on a blog post by ProgrammableWeb – the website dedicated to keeping us informed of the latest web services, mashups, and Web 2.0 APIs.

From a mashup perspective, SpaceBook is pretty incredible, incorporating (so far) 11 different Web APIs. However, for me SpaceBook is interesting because it makes use of semantic data provided via FOAF and the microformat, XFN. To do this SpaceBook makes good use of the Google Social Graph API, which aims to harness such data to generate social graphs. The Social Graph API has been available for almost a year but has had quite a low profile until now. Says the API website:

"Google Search helps make this information more accessible and useful. If you take away the documents, you're left with the connections between people. Information about the public connections between people is really useful -- as a user, you might want to see who else you're connected to, and as a developer of social applications, you can provide better features for your users if you know who their public friends are. There hasn't been a good way to access this information. The Social Graph API now makes information about the public connections between people on the Web, expressed by XFN and FOAF markup and other publicly declared connections, easily available and useful for developers."

Bravo! This creates some neat connections. Unfortunately – and as Ivan Herman regrettably notes – the generated FOAF data is inserted into Hillary Clinton's page as a page comment, rather than as a separate .rdf file or as RDFa. The FOAF file is also a little limited, but it does include links to her Twitter account. More puzzling for me though is why the embedded XHTML metadata does not use Qualified Dublin Core! Let's crank up the interoperability, please!

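For the curious, the XFN markup mentioned above is nothing more exotic than values in the `rel` attribute of ordinary links, so harvesting it needs very little code. The following is a minimal sketch using only Python's standard library; it does not call the Social Graph API, the `XFNParser` class and `xfn_links` helper are my own, the sample markup is invented, and only a subset of the XFN relationship values is recognised.

```python
from html.parser import HTMLParser

# A subset of the relationship values defined by XFN.
XFN_VALUES = {"friend", "acquaintance", "contact", "met",
              "co-worker", "colleague", "me"}


class XFNParser(HTMLParser):
    """Collect (href, relationships) for every <a> carrying XFN values."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        rel = attributes.get("rel") or ""
        # rel is a space-separated list; keep only the XFN values.
        relationships = sorted(set(rel.split()) & XFN_VALUES)
        if href and relationships:
            self.links.append((href, relationships))


def xfn_links(html):
    """Return the XFN-annotated links found in an HTML string."""
    parser = XFNParser()
    parser.feed(html)
    return parser.links


# Invented sample markup; the URLs are placeholders.
SAMPLE = """
<a href="http://example.org/alice" rel="friend met">Alice</a>
<a href="http://example.org/bob" rel="colleague">Bob</a>
<a href="http://example.org/me-elsewhere" rel="me">My other profile</a>
<a href="http://example.org/news" rel="nofollow">News</a>
"""
```

Running `xfn_links(SAMPLE)` picks out the first three links and ignores the fourth, because `nofollow` is not an XFN relationship. Collect such links across enough pages and you have the edges of a social graph.
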
Friday, 30 January 2009

Wikipedia: the new Knol?

Like many people I use Wikipedia quite regularly to check random facts. The strange aspect of this behaviour is that once I find the relevant fact, I have to immediately verify its provenance by conducting subsequent searches in order to find corroborative sources. It makes one wonder why one would use it in the first place.

Wikipedia continues to be plagued by a series of high profile malicious edits. Unfortunately, many problematic edits aren't necessarily malicious; they are just wrong or inaccurate. There are probably hundreds of thousands of inaccurate Wikipedia articles, perhaps just as many hosting malicious edits; but it takes high profile gaffes to effect real change. On the day of Barack Obama's inauguration, Wikipedia reported the deaths of West Virginia's Robert Byrd and of Edward Kennedy, the latter having collapsed during the inaugural lunch. Both reports were false.

This event appears to have compelled Jimmy Wales into being more proactive in improving the accuracy and reliability of Wikipedia. Under his proposals many future changes to articles would need to be approved by a group of vetted editors before being published. For me this news is interesting, particularly as it emerges barely two weeks after Google announced that the 100,000th Knol had been created on their 'authoritative and credible' answer to Wikipedia: Knol. Six or seven months ago the discussions focussed on how Knol was the new Wikipedia; now it appears as if Wikipedia might become the new Knol. How bizarre is that?!

Tightening the editing rules of Wikipedia has been on the agenda before and in 2007 this blog discussed how the German Wikipedia was conducting experiments which saw only trusted Wikipedians verifying changes to articles. So, will tightening the editing of Wikipedia make it the new Knol? The short answer is 'no'. Some existing Wikipedia editors can already exert authoritarian control over particular articles and can – in some cases – give the impression that they too have an axe to grind on particular topics. 'Wikipinochets' anyone? Moreover, Knol benefits from its "moderated collaboration" approach, with Knols being created by subject experts whose credentials have been verified. Wikipedia isn't going anywhere near this. Guardian columnist, Marcel Berlins, is probably right about Wikipedia when he states:
